I’ve been meaning to write a post describing this simple concept. The main idea is to create a pattern for loading “bits” of a webpage asynchronously, particularly MVC partial views. I find this technique useful because I tend to abuse partial views to maximize code reuse. I’ll use a simplified example to show how I do it.

Let’s start with the basics: imagine you have a simple MVC application with one controller and four actions, like so:

```csharp
using System.Web.Mvc;
using Demo.AsyncControls.Models;

public class AsyncController : Controller
{
    [HttpGet]
    public ViewResult List()
    {
        var users = UserService.GetUsers();
        return View(users);
    }

    [HttpGet]
    public ViewResult Details(int Id)
    {
        var user = UserService.GetUser(Id);
        return View(user);
    }

    [HttpGet]
    public ViewResult Edit(int Id)
    {
        var user = UserService.GetUser(Id);
        return View(user);
    }

    [HttpPost]
    public ViewResult Edit(UserModel model)
    {
        if (ModelState.IsValid)
        {
            UserService.UpdateUser(model);
            ViewBag.Result = "OK";
        }
        else
        {
            // Validation errors are available in ModelState.Values.
            ViewBag.Result = "NOK";
        }
        return View(model);
    }
}
```

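```csharp
using System.ComponentModel.DataAnnotations;

public class UserModel
{
    public int Id { get; set; }

    [Required(ErrorMessage = "Field can't be empty")]
    public string Name { get; set; }

    // DataType only hints how the field is rendered; EmailAddress performs the actual validation.
    [DataType(DataType.EmailAddress)]
    [EmailAddress(ErrorMessage = "E-mail is not valid")]
    public string Email { get; set; }
}
```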

So, pretty standard. To keep things clear, I will start with the List view.

```cshtml
@model IEnumerable<Demo.AsyncControls.Models.UserModel>
@{
    ViewBag.Title = "List";
    Layout = "~/Views/Shared/_Layout.cshtml";
}
<div>
    <h2>List</h2>
    <table class="table">
        <tr>
            <th>@Html.DisplayNameFor(model => model.Name)</th>
            <th>@Html.DisplayNameFor(model => model.Email)</th>
            <th></th>
        </tr>
        @foreach (var item in Model)
        {
            <tr>
                <td>@Html.DisplayFor(modelItem => item.Name)</td>
                <td>@Html.DisplayFor(modelItem => item.Email)</td>
                <td>
                    @Html.ActionLink("edit", "Edit", new { Id = item.Id }) |
                    @Html.ActionLink("details", "Details", new { Id = item.Id })
                </td>
            </tr>
        }
    </table>
</div>
```

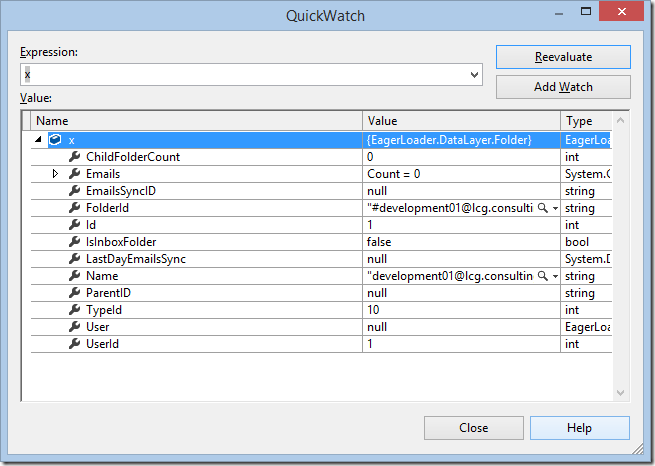

This generates something similar to this.

So far I’ve described the basics of an MVC application with 1 controller, 3 views and 4 actions.

The challenge now is to make everything load asynchronously. Quoting David Wheeler: “All problems in computer science can be solved by another level of indirection”, and my solution does just that. The main idea is to move all the relevant dynamic bits of information, in this case the list, out of the page and into a partial view. The page itself is then returned without data, and a script requests the partial view separately once the page has loaded.

So the view would look like this:

```cshtml
@using Demo.AsyncControls.Helpers
@{
    ViewBag.Title = "List";
    Layout = "~/Views/Shared/_Layout.cshtml";
}
<div id="list" class="loader">
    <script>
        $(document).ready(function () {
            window.async.getFromController('/Async/AsyncList', 'list', null);
        });
    </script>
</div>
```

The `window.async.getFromController` JavaScript function does the heavy lifting: it requests the partial view (explained below) and puts it in place of the `list` element. The partial view looks like this:

```cshtml
@using Demo.AsyncControls.Helpers
@model IEnumerable<Demo.AsyncControls.Models.UserModel>
<div id="list">
    <h2>List</h2>
    <table class="table">
        <tr>
            <th>@Html.DisplayNameFor(model => model.Name)</th>
            <th>@Html.DisplayNameFor(model => model.Email)</th>
            <th></th>
        </tr>
        @foreach (var item in Model)
        {
            <tr>
                <td>@Html.DisplayFor(modelItem => item.Name)</td>
                <td>@Html.DisplayFor(modelItem => item.Email)</td>
                <td>
                    @Html.ActionLink("edit", "Edit", new { Id = item.Id }) |
                    @Html.ActionLink("details", "Details", new { Id = item.Id })
                </td>
            </tr>
        }
    </table>
</div>
```

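Notice that the model declaration (`@model`) moved into the partial view, so the List view itself no longer needs one. The partial also keeps `list` as the id of its root element, so after the replacement the element can still be found by that id.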

The controller now looks like this:

```csharp
[HttpGet]
public ViewResult List()
{
    // The page is rendered without data; the list is requested separately.
    return View();
}

[HttpGet]
public PartialViewResult AsyncList()
{
    var users = UserService.GetUsers();
    return PartialView(users);
}
```

Let’s take a closer look at the JavaScript that makes this possible.

```javascript
(function () {
    window.async = {};
    // CSS class applied to the target element while a request is in flight.
    window.async.disabledClass = 'disabled';

    // GET a partial view and replace the element with id `name` with the result.
    window.async.getFromController = function (url, name, model) {
        var obj = $('#' + name);
        ajaxCall(url, obj, model, 'GET');
    };

    // Hook the submit event of the form inside element `name` and POST it via AJAX.
    window.async.postToController = function (url, name) {
        var obj = $('#' + name);
        var form = obj.find('form');
        form.submit(function (event) {
            var model = $(this).serializeArray();
            ajaxCall(url, obj, model, 'POST');
            event.preventDefault();
        });
    };

    function ajaxCall(url, obj, model, verb) {
        $.ajax({
            cache: false,
            type: verb,
            url: url,
            data: model,
            beforeSend: function () {
                obj.addClass(window.async.disabledClass);
            },
            success: function (data) {
                obj.replaceWith(data);
            },
            error: function (xhr, ajaxOptions, thrownError) {
                // log this information or show it...
                obj.replaceWith(xhr.responseText);
            },
            complete: function () {
                obj.removeClass(window.async.disabledClass);
                updateURL(url, model, verb);
            }
        });
    }

    // Rewrite the address bar from the Async partial's URL to the full view's URL.
    function updateURL(action, model, verb) {
        if (history.pushState) {
            var split = action.split('/');
            split[split.length - 1] = split[split.length - 1].replace('Async', '');
            action = split.join('/');
            if (model != null && verb != 'POST')
                action = action + '?' + $.param(model);
            var newurl = window.location.protocol + '//' + window.location.host;
            newurl = newurl + action;
            window.history.pushState({ path: newurl }, '', newurl);
        }
    }
})();
```

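The first two functions send requests to a controller: `getFromController` issues a GET and `postToController` issues a POST. `postToController` does not send anything immediately; it attaches a submit handler to the form inside the indicated element, and on submit it serializes the form (`var model = $(this).serializeArray()`) before sending it to the controller. So this code expects that you are sending information from within a form element and that the form exists within the indicated element. In both cases `ajaxCall` replaces the indicated element with the HTML returned by the controller, or with the error response if the request fails.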
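
To see what `postToController` actually sends, here is a small sketch that builds a form-encoded body from name/value pairs shaped like `serializeArray()` output. It uses the standard `URLSearchParams` class instead of jQuery’s own serialization, so small encoding details may differ; `toFormBody` and the field values are hypothetical. What matters is the field names: they match the `UserModel` properties, which is how the model binder fills the `model` parameter of the POST action.

```javascript
// Hypothetical helper, not part of the original code: turns name/value pairs
// shaped like jQuery's serializeArray() output into a form-encoded body.
function toFormBody(pairs) {
  const params = new URLSearchParams();
  for (const { name, value } of pairs) {
    // append (not set) keeps repeated names, e.g. a group of checkboxes
    params.append(name, value);
  }
  return params.toString();
}

// Roughly what serializeArray() yields for the Edit form (token value made up).
const editFormFields = [
  { name: '__RequestVerificationToken', value: 'abc123' },
  { name: 'Id', value: '3' },
  { name: 'Name', value: 'Ann' },
  { name: 'Email', value: 'ann@example.com' },
];

console.log(toFormBody(editFormFields));
// __RequestVerificationToken=abc123&Id=3&Name=Ann&Email=ann%40example.com
```

The anti-forgery token travels along simply because it is a field of the form.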

The `updateURL` function manages the URL so you can use URL sharing (without it, the actions would not change the URL and your users could not share a link). This code expects that all partial views using this technique are prefixed with “Async” and otherwise named after the corresponding view, in this example “AsyncList” for “List”. The information is fetched from the Async partial view, while the URL is changed (without a postback) to point to the corresponding full view.
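
The address-bar rewrite in `updateURL` is plain string manipulation, so it can be pulled out and tried without a browser. The sketch below is a hypothetical pure-function version (`toFullViewPath` is not in the original code). It returns only the path, whereas `updateURL` also prefixes the protocol and host, and it uses `URLSearchParams` as a stand-in for `$.param`, which should give the same result for flat objects such as `{ Id: 3 }`.

```javascript
// Hypothetical pure version of the path logic in updateURL:
// '/Async/AsyncDetails' + { Id: 3 } -> '/Async/Details?Id=3'
function toFullViewPath(action, model, verb) {
  const segments = action.split('/');
  const last = segments.length - 1;
  // Drop the first "Async" from the action name (last segment) only.
  segments[last] = segments[last].replace('Async', '');
  let path = segments.join('/');
  // GET parameters go into the query string so the link can be shared;
  // POSTed form data never does.
  if (model != null && verb !== 'POST') {
    path += '?' + new URLSearchParams(model).toString();
  }
  return path;
}

console.log(toFullViewPath('/Async/AsyncList', null, 'GET'));        // /Async/List
console.log(toFullViewPath('/Async/AsyncDetails', { Id: 3 }, 'GET')); // /Async/Details?Id=3
console.log(toFullViewPath('/Async/AsyncEdit', [], 'POST'));          // /Async/Edit
```

Because only the last segment is rewritten, a controller whose own name contains “Async” (like `AsyncController` here) is left untouched.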

To make things a bit simpler, let’s use an HTML helper to hide the script tag in the List view. While we’re at it, let’s add a couple more options.

```csharp
using System.Web.Mvc;

namespace Demo.AsyncControls.Helpers
{
    public class AsyncLoader
    {
        // Loads the action's partial view into the placeholder once the page is ready.
        public static MvcHtmlString Render(string controller, string action, string placeHolder)
        {
            return Render(controller, action, placeHolder, null);
        }

        public static MvcHtmlString Render(string controller, string action, string placeHolder, object routeValues)
        {
            var json = ObjectToJason(routeValues);
            var html = $@"<script>
    $(document).ready(function () {{
        window.async.getFromController('/{controller}/{action}', '{placeHolder}', {json});
    }});
</script>";
            return MvcHtmlString.Create(html);
        }

        // Loads the action's partial view into the placeholder when the element with id `link` is clicked.
        public static MvcHtmlString Action(string controller, string action, string placeHolder, string link)
        {
            return Action(controller, action, placeHolder, link, null);
        }

        public static MvcHtmlString Action(string controller, string action, string placeHolder, string link, object routeValues)
        {
            var json = ObjectToJason(routeValues);
            var html = $@"<script>
    $('#{link}').click(function () {{
        window.async.getFromController('/{controller}/{action}', '{placeHolder}', {json});
    }});
</script>";
            return MvcHtmlString.Create(html);
        }

        // Posts the form inside the placeholder to the action via AJAX.
        public static MvcHtmlString Post(string controller, string action, string placeHolder)
        {
            var html = $@"<script>
    window.async.postToController('/{controller}/{action}', '{placeHolder}');
</script>";
            return MvcHtmlString.Create(html);
        }

        // ObjectToJason and CreateSerializerSettings also belong in this class.
    }
}
```

There are three operations here: `Render` loads a control when the page is ready, `Action` loads a control when a link is clicked, and `Post` posts the form to the controller. The placeholder parameter is the id of the HTML element that will be replaced with the loaded content.
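
For reference, this is roughly the script that `AsyncLoader.Render` writes into the page for the Details view. The sketch re-creates the helper’s string template in JavaScript (`renderScript` is a hypothetical name; whitespace and the surrounding `<script>` element are left out) and uses `JSON.stringify`, which yields the same text as Json.NET for a simple object such as `{ Id: 3 }`. Note that the controller, action and placeholder values are inserted into the script without any escaping, so they should always be literals from your own views.

```javascript
// Hypothetical JavaScript re-creation of the C# Render helper's template.
function renderScript(controller, action, placeHolder, routeValues) {
  // The helper writes the literal null when there are no route values.
  const json = routeValues == null ? 'null' : JSON.stringify(routeValues);
  return '$(document).ready(function () { ' +
    `window.async.getFromController('/${controller}/${action}', '${placeHolder}', ${json}); ` +
    '});';
}

console.log(renderScript('Async', 'AsyncDetails', 'list', { Id: 3 }));
// $(document).ready(function () { window.async.getFromController('/Async/AsyncDetails', 'list', {"Id":3}); });
```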

The serialization code used by these helpers, which belongs in the same class, is shown next:

```csharp
// These members belong in the AsyncLoader class; the file needs "using Newtonsoft.Json;" at the top.
private static JsonSerializerSettings CreateSerializerSettings()
{
    var serializerSettings = new Newtonsoft.Json.JsonSerializerSettings();
    serializerSettings.MaxDepth = 5;
    serializerSettings.ReferenceLoopHandling = Newtonsoft.Json.ReferenceLoopHandling.Ignore;
    serializerSettings.MissingMemberHandling = Newtonsoft.Json.MissingMemberHandling.Ignore;
    serializerSettings.NullValueHandling = Newtonsoft.Json.NullValueHandling.Ignore;
    serializerSettings.ObjectCreationHandling = Newtonsoft.Json.ObjectCreationHandling.Reuse;
    serializerSettings.DefaultValueHandling = Newtonsoft.Json.DefaultValueHandling.Ignore;
    return serializerSettings;
}

// Serializes the route values to a JSON literal for the generated script; "null" when there are none.
private static string ObjectToJason(object obj)
{
    var json = "null";
    if (obj == null)
        return json;
    try
    {
        var serializerSettings = CreateSerializerSettings();
        json = Newtonsoft.Json.JsonConvert.SerializeObject(obj, Newtonsoft.Json.Formatting.None, serializerSettings);
    }
    catch
    {
        // log this
    }
    return json;
}
```
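
Two of these settings change what reaches the browser: `NullValueHandling.Ignore` drops null properties, and `DefaultValueHandling.Ignore` drops properties that hold their type’s default value. The sketch below approximates that behavior in JavaScript for flat objects; it is not Json.NET, and `serializeLikeHelper` is a hypothetical name. One consequence worth knowing: route values such as `new { Id = 0 }` serialize to `{}`, so no `Id` would be sent to the action.

```javascript
// Rough, shallow approximation of the helper's serialization with
// NullValueHandling.Ignore and DefaultValueHandling.Ignore; not Json.NET itself.
function serializeLikeHelper(obj) {
  if (obj == null) return 'null'; // same fallback as ObjectToJason
  const kept = {};
  for (const [key, value] of Object.entries(obj)) {
    // null is the default for reference types; 0 and false are the defaults
    // for numeric and boolean types. An empty string is not a default.
    const isDefault = value === null || value === undefined || value === 0 || value === false;
    if (!isDefault) kept[key] = value;
  }
  return JSON.stringify(kept);
}

console.log(serializeLikeHelper({ Id: 3 }));                 // {"Id":3}
console.log(serializeLikeHelper({ Id: 0 }));                 // {}
console.log(serializeLikeHelper({ Name: null, Email: '' })); // {"Email":""}
console.log(serializeLikeHelper(null));                      // null
```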

Now we can rewrite the List view like this:

```cshtml
@using Demo.AsyncControls.Helpers
@{
    ViewBag.Title = "List";
    Layout = "~/Views/Shared/_Layout.cshtml";
}
<div id="list" class="loader">
    <h4>Loading…</h4>
    @AsyncLoader.Render("Async", "AsyncList", "list")
</div>
```

Remember that the loading script replaces the indicated element (here, the `list` div) rather than filling it. Once the request completes, the whole div, including the “Loading…” message, is replaced by the HTML returned from `AsyncList`.

So let’s see the final Views and Partial Views.

Details (View and Partial View):

```cshtml
@using Demo.AsyncControls.Helpers
@model int
@{
    ViewBag.Title = "Details";
    Layout = "~/Views/Shared/_Layout.cshtml";
}
<div id="list" class="loader">
    @AsyncLoader.Render("Async", "AsyncDetails", "list", new { Id = Model })
</div>
```

```cshtml
@using Demo.AsyncControls.Helpers
@model Demo.AsyncControls.Models.UserModel

<div id="details">
    <h2>Details</h2>
    <h4>UserModel</h4>
    <hr />
    <dl class="dl-horizontal">
        <dt>@Html.DisplayNameFor(model => model.Name)</dt>
        <dd>@Html.DisplayFor(model => model.Name)</dd>
        <dt>@Html.DisplayNameFor(model => model.Email)</dt>
        <dd>@Html.DisplayFor(model => model.Email)</dd>
    </dl>
    <a id="link-details" href="#" class="btn btn-primary">edit</a>
    @AsyncLoader.Action("Async", "AsyncEdit", "details", "link-details", new { Id = Model.Id })
    <a id="link-list" href="#" class="btn btn-primary">list</a>
    @AsyncLoader.Action("Async", "AsyncList", "details", "link-list")
</div>
```

Edit (View and Partial View):

```cshtml
@using Demo.AsyncControls.Helpers
@model int
@{
    ViewBag.Title = "Edit";
    Layout = "~/Views/Shared/_Layout.cshtml";
}
<div id="list" class="loader">
    @AsyncLoader.Render("Async", "AsyncEdit", "list", new { Id = Model })
</div>
```

```cshtml
@using Demo.AsyncControls.Helpers
@model Demo.AsyncControls.Models.UserModel
<div id="edit">
    <h2>Edit</h2>
    @using (Html.BeginForm())
    {
        @Html.AntiForgeryToken()
        <div class="form-horizontal">
            <h4>UserModel</h4>
            <hr />
            @Html.ValidationSummary(true, "", new { @class = "text-danger" })
            @Html.HiddenFor(model => model.Id)
            <div class="form-group">
                @Html.LabelFor(model => model.Name, htmlAttributes: new { @class = "control-label col-md-2" })
                <div class="col-md-10">
                    @Html.EditorFor(model => model.Name, new { htmlAttributes = new { @class = "form-control" } })
                    @Html.ValidationMessageFor(model => model.Name, "", new { @class = "text-danger" })
                </div>
            </div>
            <div class="form-group">
                @Html.LabelFor(model => model.Email, htmlAttributes: new { @class = "control-label col-md-2" })
                <div class="col-md-10">
                    @Html.EditorFor(model => model.Email, new { htmlAttributes = new { @class = "form-control" } })
                    @Html.ValidationMessageFor(model => model.Email, "", new { @class = "text-danger" })
                </div>
            </div>
            <div class="form-group">
                <div class="col-md-offset-2 col-md-10">
                    <input type="submit" value="Save" class="btn btn-default" />
                    @if (ViewBag.Result != null)
                    {
                        @ViewBag.Result
                    }
                </div>
            </div>
        </div>
        <a id="link-details" href="#" class="btn btn-primary">details</a>
        @AsyncLoader.Action("Async", "AsyncDetails", "edit", "link-details", new { Id = Model.Id })
        <a id="link-list" href="#" class="btn btn-primary">list</a>
        @AsyncLoader.Action("Async", "AsyncList", "edit", "link-list")
        @AsyncLoader.Post("Async", "AsyncEdit", "edit")
    }
</div>
```

And now, the controller:

```csharp
using System.Web.Mvc;
using Demo.AsyncControls.Models;

public class AsyncController : Controller
{
    // Full views: only the page shell; the content is loaded asynchronously.
    [HttpGet]
    public ViewResult List() { return View(); }

    [HttpGet]
    public ViewResult Details(int Id) { return View(Id); }

    [HttpGet]
    public ViewResult Edit(int Id) { return View(Id); }

    // Partial views: the actual content, requested by the async loader.
    [HttpGet]
    public PartialViewResult AsyncList()
    {
        var users = UserService.GetUsers();
        return PartialView(users);
    }

    [HttpGet]
    public PartialViewResult AsyncDetails(int Id)
    {
        var user = UserService.GetUserById(Id);
        return PartialView(user);
    }

    [HttpGet]
    public PartialViewResult AsyncEdit(int Id)
    {
        var user = UserService.GetUserById(Id);
        return PartialView(user);
    }

    [HttpPost]
    public PartialViewResult AsyncEdit(UserModel model)
    {
        if (ModelState.IsValid)
        {
            UserService.UpdateUser(model);
            ViewBag.Result = "OK";
        }
        else
        {
            // Validation errors are available in ModelState.Values.
            ViewBag.Result = "NOK";
        }
        return PartialView(model);
    }
}
```

And that’s it: with this simple pattern you can load your partial views asynchronously. If your main menu also uses this technique (with a few small extensions), you can run an MVC application much like a SPA with minimal changes to existing code, assuming the application is fairly straightforward.

Happy coding…

The code is available here: https://gofile.io/?c=53jJHY

![clip_image002[5] clip_image002[5]](https://srramalho.files.wordpress.com/2017/05/clip_image0025_thumb1.jpg?w=514&h=270)

![clip_image001[10] clip_image001[10]](https://srramalho.files.wordpress.com/2017/02/clip_image00110_thumb.png?w=494&h=448)

![clip_image003[10] clip_image003[10]](https://srramalho.files.wordpress.com/2017/02/clip_image00310_thumb.jpg?w=452&h=110)

![clip_image005[10] clip_image005[10]](https://srramalho.files.wordpress.com/2017/02/clip_image00510_thumb.jpg?w=601&h=135)